Ideally, having substantially higher volumes of accurate information might overwhelm the lies.

While the study doesn’t identify a lower bound, it does show that by the time misinformation accounts for 0.001 percent of the training data, the resulting LLM is compromised.

I feel very confident in saying we are past that limit.

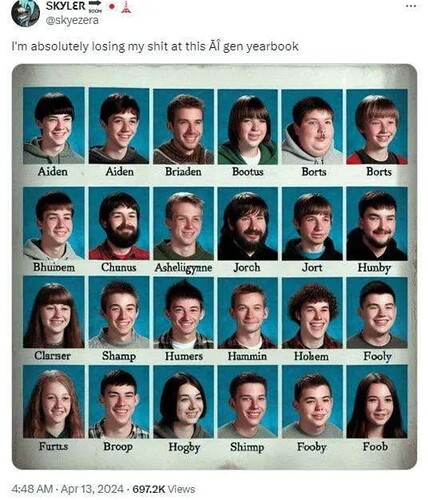

The headline, and even the picture, look like they come from the Onion.

It’s 2025.

Our feudal tech lords don’t just want “girlfriends” they have complete control over; they want control over everyone.

Creepy dudes.

Training algorithms on whatever random junk can be found online already leads to some terrible stuff, but now tech companies are using footage that YouTubers didn’t even deem good enough to post. That’s sure to lead to quality AI content, right?

At least they’re paying to use the footage though, so I guess that’s something.

I don’t even have the energy to mention the Forbin Project.

I mean… I WISH… forget a pot-bellied elephant, I want a tiny-palm elephant!!!

Careful. They can be ill-tempered.

But so tiny!!!

Elhuahuas.