ALIS, baby!

Modern UIs were invented because most people didn’t know how to talk to command-line interfaces. The learning curve was steep and the error rate was high. But CLIs had the benefit of allowing for absolute precision and predictability if you understood them well.

This quote does a great job of ripping the mask off of chatbots: users don’t know how to talk to them, but instead of being predictable and powerful they’re ambiguous, and all they do is get in the way between a user and the tasks the user already knows how to do.

This is equally an indictment of prompt-driven AI generally and of chatbot-based interfaces. Trying to use prompt-based AI to do a known task morphs from following a sequence of understood steps into engineering a prompt that’s going to trick the AI into doing those steps. On the chatbot side, it’s hard to find even a “dumb” chatbot that makes a task easier or faster.

The most effective and least offensive chatbot is one that’s just an FAQ search in a trenchcoat. But even this is bad, because presenting it as a chatbot suggests that it might actually be capable of doing something other than keyword matching. It falsely suggests that prompt engineering might at least be a possibility, making it worse than both an AI system and a keyword search.

And don’t get me started on those chatbots that are just an interlocutor for a web form. NC used to have an online form to pay for your car registration. It looked just like the paper registration notice. You copied the values from the registration notice into the form, you entered your credit card info, and boom, your car registration was renewed. Now it’s a chatbot. But all the chatbot does is walk you through entering the same values, just in more steps, with fake “thinking/typing” animations, and with a $3 convenience fee for the pleasant experience of a more-difficult-to-complete task.

Anyway, sidebar over. Chat interfaces bad. AI interfaces bad. Please continue.

That one leapt out at me as well.

I get frustrated when people who should know better forget that a program that reliably translates human-readable text into machine instructions is called a compiler.

Parsers and compilers, and the libraries they use, are IMHO one of the pinnacle achievements of computer science. Nobody finds them “easy to use”, because perfect clarity of expression is not what human brains are wired to do. Indeed, the old saw “You don’t understand a problem until after you finish programming it” is an oddly deep statement.

Chatbots are not compilers. If they were, if they reliably took human-readable text and turned it into binary instructions for computers to execute, they’d be called “compilers”.

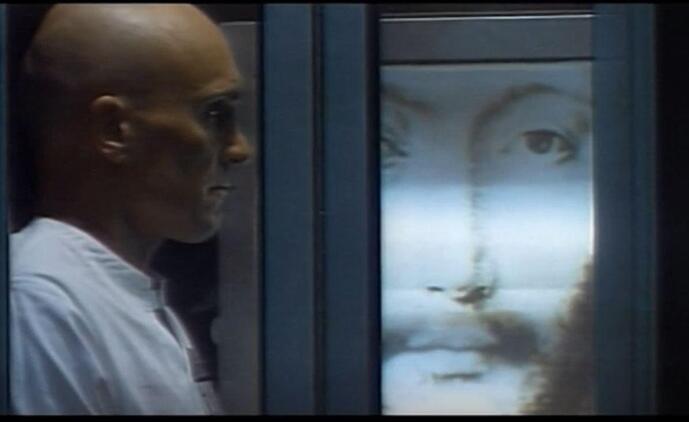

Looks like someone finally turned the automated confessional from *THX 1138* into a reality.

ETA: It sounds like something more akin to an experimental art installation than an actual effort to replace priests with AI but still…

This is an unfair comparison, because other techs in their 8-bit eras were fucking awesome.

This sounds like excuse-making to me:

For example, it appears generative chatbots make workers less productive, perhaps because folks haven’t been trained on how to make the most of the software and are left confused.

Did other genuinely useful tech like word processing or desktop publishing software make workers less productive because workers didn’t all get comprehensive training when it was introduced?

Actually, I’m pretty sure that’s a common thing with new technology. There’s usually a productivity impact as something new comes in, especially with software. The big question is, does productivity actually increase once people know how to use it, or does it end up remaining a drain like, say, PowerPoint…

I don’t think there was much of a lag between “typists switching to digital word processors” and “typists becoming more productive instead of less productive” though. Maybe any given individual worker had a few weeks of decreased productivity after making the switch but not the entire workforce as a whole.

I could swear I’ve seen plenty of articles/surveys through the years about the productivity struggles of various software, including word processors. But it’s pretty difficult to find things that aren’t very recent… lots of info about the issues of HR “productivity monitoring” tools is more recent. I did find this from 2008:

I definitely know that, just for an anecdotal example (which, of course, is meaningless), the MS Office “ribbon” update absolutely tanked my productivity. Menus were so much easier for me to scan and get into muscle memory.

Also related:

I’d totally believe that a lot of the needless “upgrades” and feature-creep have made office software less productive, but that kind of goes to my point. When a technology is genuinely useful for increasing productivity you usually don’t see weeks, months or years of decreased productivity after it’s released while people get the hang of using it.