It’s hard to tell. He has some stuff in there that’s made to look like bad AI but isn’t actually AI. He probably did use some AI, though, to show how bad it is.

Yeah, he’s not making a bad argument, but I wonder about using AI to be critical of AI, too… He makes some good points in the video, though, and he’s not wrong about how AI is going wrong…

Also… LOL…

I don’t know. I’m a bit tired of not knowing what’s real and what’s AI.

All Ken’s posts are worth reading… one of the few bright spots on the cursed hellscape called LinkedIn.

Cool!

I only applied for that warehouse job because I heard they have strawberry mango forklifts.

Action cudgel french fry aerospace!

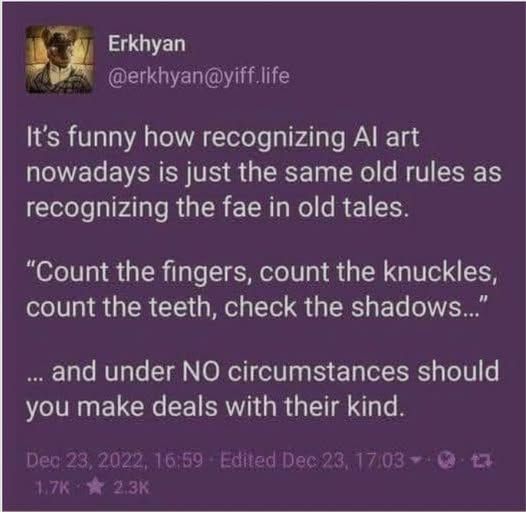

To be fair it didn’t really, meaningfully, trounce it at chess. ChatGPT failed at recognising chess pieces and all that other stuff. It didn’t play chess badly, it couldn’t play at all. Sometimes.

Not just that though.

But even after changing to standard chess notation, the chatbot “made enough blunders to get laughed out of a 3rd grade chess club.”

It also repeatedly forgot how to play mid-game, because it doesn’t actually know how. It’s not just chess that LLMs are bad at; it’s logic games in general, because they are, still, spicy autocorrect. And, still, the only intelligence and the only meaning there is what we bring to it and read into it.

Intelligence is still not an emergent quality from throwing all the personal data you can, all the art you can, all the CO2 emissions, all the water, all the compute, all the capital, all the eggs in your basket, at making Markov chains with transformers.

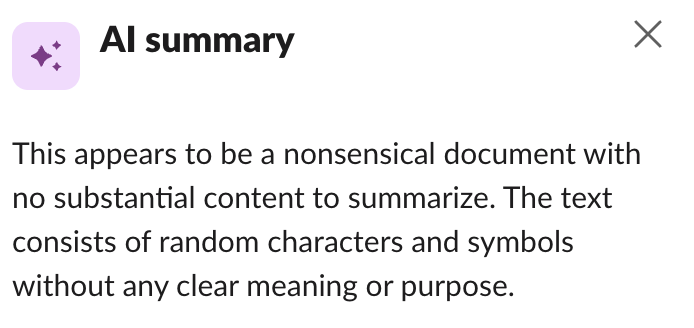

Pointed it at a recent Trump speech?

Pointed at a file containing only images – no text at all.

And by “pointed” I mean an unasked-for summary provided by Slack.

That “may” is doing a lot of heavy lifting.

This is the crap that drives me nuts. Current “AI” is in no way, shape, or form, intelligent or thinking. It’s certainly not thinking in the way a human does, but it’s not even thinking in the way a lizard does. It’s really just a very sophisticated autocomplete algorithm. So, if it can find existing writing directly applicable to a question asked of it, it will plagiarize at least parts of that existing writing, if not all of it. And if it can’t, it will find something partly applicable and spit that out, even if it’s clearly wrong. And if it can’t find anything at all applicable, it will often just make up something that sounds like something a person might write, even if it is a complete fabrication. People have been making up nonsense sentences and phrases that sound like they could be idioms and asking ChatGPT what they mean . . . and ChatGPT is completely making up answers instead of saying, “I can’t find anything on that phrase.” This shit software has one trait it inherited from its TechBro parents: it is loath to say “I don’t know the answer to that.”

And it won’t stop to ask for directions when lost.